Introduction to the Science of Sound

One of the things that draws me to audio production is the science of sound. When I first started out, my manager at the time kept banging on about the ‘sound sphere’ and how everything had to fit seamlessly within it. It was like this mystical universe that I had to learn about in order to make the perfect mix. So, for years I’ve had my head down trying to figure out what he was talking about, mainly through trial and error and experimentation.

Sound, as we hear it, is a balance between the physics of vibrations and the way our ears and brains perceive what we’re hearing. To try and understand the science of sound and its impact on audio production, let’s take a deep dive, unpack some of its complexities, and explain the role it plays. But brace yourself, coz we might need to go beyond the technical stuff; it’s about understanding the soul of sound, how it resonates with us, and the different ways it impacts audio production. Wow, I went deep early.

Advanced Acoustics and Sound Wave Physics

Complex Waveforms

The first thing Walter White (assuming he was subbing for physics) would tell you in a class about sound science is you need to understand what’s going on in complex waveforms. Unlike simple sine waves, complex waveforms are a combination of various frequencies, each adding fullness, richness and awesomeness to the sound. In audio production, knowing a bit about the waveforms is a plus – they form the basis of every little sound we create.

In the synth world, complex waveforms include square waves and sawtooth waves; in the real world, they include music, speech and more. From the warm hum of a bass guitar to the crisp rustle of leaves, each sound is a complex waveform. One way to understand complex waveforms is through Fourier analysis, which breaks a complex waveform down into a set of simple sine waves, each with its own frequency and strength.
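To see Fourier analysis in action, here’s a minimal Python sketch (my own toy example, using only the standard library and a deliberately slow, naive DFT). It builds one second of a 4 Hz square wave at a tiny 64 Hz sample rate and measures how much of each frequency it contains. The function name and numbers are illustrative assumptions, not anything from a real audio tool.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Naive discrete Fourier transform: |X[k]| / N for bins k = 0 .. N/2."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))
        mags.append(abs(acc) / n)
    return mags

# One second of a 4 Hz square wave at a toy sample rate of 64 Hz,
# so each DFT bin is exactly 1 Hz wide.
SAMPLE_RATE = 64
PERIOD = 16  # samples per cycle (64 / 4)
square = [1.0 if (i % PERIOD) < PERIOD // 2 else -1.0 for i in range(SAMPLE_RATE)]

mags = dft_magnitudes(square)
for freq, mag in enumerate(mags):
    if mag > 1e-9:  # skip bins that are numerically zero
        print(f"{freq:2d} Hz: {mag:.3f}")
```

Only the odd multiples of 4 Hz (4, 12, 20 and 28 Hz) show up, getting weaker as they go up: the classic square-wave recipe of a fundamental plus odd harmonics. Real software uses the much faster FFT, but the maths is the same.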

Harmonic and Inharmonic Tones

Once you’ve got your head around waveforms, working out the difference between harmonic and inharmonic tones opens a whole new door to manipulating sound. Harmonic tones involve a bit more maths: their frequencies are integer multiples of a fundamental frequency. Imagine you have a guitar string. When you pluck it, it makes a certain note. This note is the “fundamental frequency” – it’s the basic sound the string makes.

Now, along with this main note, the string also vibrates in smaller segments, creating additional, higher notes. These extra notes are like echoes of the main note, but they are higher in pitch. The “integer multiples” part means that these higher notes are not just random; they follow a pattern. Each of these higher notes has exactly two times, three times, four times (and so on) the frequency of the main note. These extra notes blend with the main note to make the sound richer – oh hello harmonics! Inharmonic tones are the opposite: their overtones don’t line up neatly with whole-number multiples, which is what gives bells, cymbals and many drums their clangy, less clearly defined pitch.
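Here’s the harmonic idea as a tiny Python sketch, assuming a 110 Hz fundamental (the open A string on a guitar). The `is_harmonic` helper and its 1% tolerance are my own illustrative choices; the bar ratios are idealised textbook values for a simple uniform bar, used here as an inharmonic contrast.

```python
def harmonic_series(fundamental_hz, count):
    """The first `count` harmonics of a fundamental: f, 2f, 3f, ..."""
    return [fundamental_hz * n for n in range(1, count + 1)]

def is_harmonic(partials_hz, fundamental_hz, tolerance=0.01):
    """True if every partial is within `tolerance` (a fraction) of a whole-number multiple."""
    for p in partials_hz:
        ratio = p / fundamental_hz
        nearest = round(ratio)
        if nearest == 0 or abs(ratio - nearest) > tolerance * nearest:
            return False
    return True

# Open A string on a guitar: the fundamental is 110 Hz.
a_string = harmonic_series(110.0, 5)
print(a_string)                      # [110.0, 220.0, 330.0, 440.0, 550.0]
print(is_harmonic(a_string, 110.0))  # True

# Idealised partial ratios of a simple uniform bar (roughly 1 : 2.76 : 5.40):
# clearly not whole-number multiples, so the tone is inharmonic.
bar = [440.0, 440.0 * 2.756, 440.0 * 5.404]
print(is_harmonic(bar, 440.0))       # False
```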

Psychoacoustics: Perception of Sound

Perceptual Properties

OK, next up is Psychoacoustics! No, it’s nothing to do with psychos with acoustic guitars!

Psychoacoustics is the study of how we perceive sound, combining elements of psychology and acoustics. How do your tiny human ears perceive pitch, loudness and timbre? Perception dictates not just what we hear, but how we feel about what we hear. Ooh! For instance, two sounds with the same physical intensity (sound pressure level) can be perceived as having different loudness, because our ears are much less sensitive to very low and very high frequencies than to the midrange.
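One standard way engineers account for this is A-weighting, a frequency curve loosely modelled on how our hearing sensitivity changes with frequency at fairly quiet listening levels, and used in sound level meters. Below is a minimal Python sketch of the commonly quoted analytic A-weighting formula (as defined in IEC 61672); treat it as a rough illustration of perception, not a full loudness model. Around 1 kHz the correction is about 0 dB, while at 100 Hz it is roughly -19 dB, which is another way of saying a 100 Hz tone has to be pushed much harder to sound as loud.

```python
import math

def a_weighting_db(freq_hz):
    """A-weighting correction in dB (IEC 61672 analytic curve), about 0 dB at 1 kHz."""
    f2 = freq_hz ** 2
    ra = (12194.0 ** 2 * f2 ** 2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20 * math.log10(ra) + 2.0  # +2.0 dB normalises the curve to ~0 dB at 1 kHz

for f in (50, 100, 1000, 4000, 10000):
    print(f"{f:>5} Hz: {a_weighting_db(f):+6.1f} dB")
```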

Pitch is what lets us follow melody and harmony, loudness shapes the intensity and impact of a sound, and timbre adds colour and character. The best audio producers, music producers and sound designers juggle all these things, creating audio that evokes emotions and reactions. So, psychoacoustics is half storytelling, half science.

Auditory Illusions

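Think of auditory illusions like magic tricks for your ears. One example is the Shepard tone. It’s a sound that seems to keep going higher and higher (or lower and lower) forever, but it never actually gets to the top or bottom. It’s like a never-ending staircase for your ears. The trick is that it’s built from several tones stacked an octave apart, all gliding in the same direction: the tones at the top fade out as new ones fade in at the bottom, so your ear never catches the reset. This kind of sound trick is really interesting because it shows how complex and amazing our hearing is.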
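Here’s a rough Python sketch of how a Shepard tone can be built, assuming eight sine components an octave apart starting at 27.5 Hz, a 10-second cycle and a bell-shaped loudness curve (all of these numbers are my own choices). It only calculates the frequency and loudness of each component at a given moment; to actually hear it you’d sum those sines while moving `t` forward, which I’ve left out.

```python
import math

def shepard_components(t, cycle_seconds=10.0, base_hz=27.5, octaves=8, spread=1.5):
    """(frequency_hz, amplitude) for every sine in the Shepard stack at time t.

    Each component rises one octave per cycle and wraps from the top back to the
    bottom. A bell curve over log-frequency keeps the wrap inaudible, because
    components near either edge are almost silent.
    """
    shift = (t / cycle_seconds) % 1.0  # fraction of an octave travelled so far
    center = octaves / 2.0
    components = []
    for k in range(octaves):
        position = (k + shift) % octaves  # octave position, 0 <= position < octaves
        freq = base_hz * 2 ** position
        amp = math.exp(-0.5 * ((position - center) / spread) ** 2)
        components.append((freq, amp))
    return sorted(components)

for t in (0.0, 2.5, 5.0, 7.5, 10.0):
    loudest = max(shepard_components(t), key=lambda fa: fa[1])
    print(f"t={t:>4}s  loudest component {loudest[0]:7.1f} Hz")
```

Because each component wraps from the top back to the bottom where the bell curve makes it nearly silent, the stack at t = 10 s is identical to the stack at t = 0, yet every step in between sounds like it’s climbing.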

Our ears and brain work together to make sense of sounds, and sometimes they can be fooled, just like our eyes can be tricked by optical illusions. When you’re creating sounds for movies, music, or video games, these auditory illusions come in handy, because they can make the sounds more engaging and can create a sense of never-ending motion or change.

One of the pioneers of electronic music, Jean-Michel Jarre, used the Shepard tone in his song “Oxygène (Part II)”. It’s also used in video game sound design to create an uneasy feeling or tension, and Hans Zimmer is a fan of the Shepard tone in many of his epic movie scores.

Digital Audio Deep Dive

High-Resolution Audio

There’s always a lot of talk about high-resolution audio compared to standard resolution and what the real benefits are. What does high-res audio really mean? It’s all about sample rates and bit depth, but how much of that extra detail can the human ear actually hear?

High-resolution audio means files that have a higher sample rate and bit depth than standard CD quality, which is 16-bit depth with a 44.1 kHz sample rate. High-resolution audio can go up to 24-bit and 96 kHz or even 192 kHz. Higher sample rates mean the audio signal is captured more frequently, which raises the highest frequency that can be recorded: half the sample rate, so 22.05 kHz for CD and 48 kHz at 96 kHz. Higher bit depth means more dynamic range, roughly 6 dB per bit, so about 96 dB at 16-bit versus about 144 dB at 24-bit.
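If you want to check the numbers yourself, here’s some quick Python arithmetic (the function names are just my own labels). It uses the Nyquist limit (half the sample rate), the theoretical range between full scale and one quantisation step (20·log10(2^bits), about 6.02 dB per bit), and the raw data rate of uncompressed stereo PCM.

```python
import math

def nyquist_hz(sample_rate_hz):
    """Highest frequency a sample rate can represent: half the sample rate."""
    return sample_rate_hz / 2

def dynamic_range_db(bit_depth):
    """Ratio of full scale to one quantisation step: 20*log10(2**bits), ~6.02 dB per bit."""
    return 20 * math.log10(2 ** bit_depth)

def data_rate_kbps(sample_rate_hz, bit_depth, channels=2):
    """Uncompressed PCM data rate in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000

for label, rate, bits in [("CD", 44_100, 16), ("Hi-res", 96_000, 24), ("Hi-res", 192_000, 24)]:
    print(f"{label:6} {rate / 1000:g} kHz / {bits}-bit: "
          f"up to {nyquist_hz(rate) / 1000:g} kHz, "
          f"~{dynamic_range_db(bits):.0f} dB range, "
          f"{data_rate_kbps(rate, bits):,.0f} kbps stereo")
```

For CD that works out at 22.05 kHz, about 96 dB and roughly 1,411 kbps; 24-bit/96 kHz pushes it to 48 kHz, about 144 dB and 4,608 kbps, over three times the data.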

The human ear’s perception of high-resolution audio versus standard audio can vary greatly depending on your hearing and the device the audio is played back on. It’s an ongoing debate among audiophiles and sound engineers, and ultimately it comes down to each listener’s ears. Some people say they hear a big improvement; others don’t notice much difference at all.

Basically, if you have good hearing (and good playback gear), high-res audio may sound better to you.

Digital Signal Processing (DSP)

Digital Signal Processing has transformed audio production, allowing for intricate changes in frequency, pitch, space, etc. Effects like reverb, delay, distortion and compression, which were traditionally created using physical devices or specific recording techniques, are now made with digital plug-ins. Now, you can do almost anything to enhance or change the original recording.

DSP has revolutionised audio restoration. It enables the removal of noise, clicks and other unwanted artifacts from recordings, a process that was once nearly impossible with analog gear. It’s made audio production more versatile, efficient and creative. From home studios to professional recording setups, Digital Signal Processing has been a game changer.
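As a taste of how restoration DSP works, here’s a minimal Python sketch of one classic declicking idea: a median filter, which replaces each sample with the median of its neighbours so that a one-sample spike gets outvoted. It’s a simplified illustration; the window size and test signal are arbitrary, and dedicated restoration tools typically detect clicks first and only repair the affected samples, because running a median filter over everything also dulls genuine peaks.

```python
from statistics import median

def median_declick(samples, window=5):
    """Replace each sample with the median of its neighbourhood.

    Isolated one- or two-sample spikes (clicks) are outvoted by their neighbours,
    while slowly varying audio passes through largely unchanged.
    """
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo = max(0, i - half)               # shrink the window at the edges
        hi = min(len(samples), i + half + 1)
        out.append(median(samples[lo:hi]))
    return out

signal = [0.0, 0.1, 0.2, 0.3, 0.95, 0.3, 0.2, 0.1, 0.0]  # 0.95 is the click
print(median_declick(signal))
```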


Dynamics and Frequency Processing Science

Advanced Compression Techniques

Dynamic range compression is one of the basics of audio production, but to master it you need to know your way around the technical stuff, while balancing that with a creative vision. Compression’s main job is to reduce the difference between the loudest and softest parts of an audio signal, squashing the signal to give a more consistent sound.
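Under the hood, a compressor’s core is a simple rule often called the gain computer. Here’s a minimal Python sketch of a hard-knee version, assuming a -18 dB threshold and a 4:1 ratio (arbitrary example settings). Real compressors also smooth this with attack and release times and add make-up gain, which I’ve left out.

```python
def compressor_gain_db(level_db, threshold_db=-18.0, ratio=4.0):
    """Hard-knee compressor curve: gain change (dB) for a given input level (dB).

    Below the threshold nothing happens; above it, every `ratio` dB of extra input
    only produces 1 dB of extra output.
    """
    if level_db <= threshold_db:
        return 0.0
    over = level_db - threshold_db
    return -(over - over / ratio)

for level in (-30.0, -18.0, -10.0, -2.0):
    gain = compressor_gain_db(level)
    print(f"in {level:6.1f} dB -> gain {gain:6.1f} dB -> out {level + gain:6.1f} dB")
```

Inputs at -10 dB and -2 dB (8 dB apart) come out at -16 dB and -14 dB, only 2 dB apart: that’s the ‘reduce the difference between loud and soft’ job in numbers.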

But it can also be used in more advanced ways, like with side-chain compression – used heavily in EDM to create that classic “pumping” effect on beats. The kick drum triggers compression on a bass line or a whole track, creating a rhythmic variation in volume that adds a groove to the song.
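Here’s a simplified Python sketch of the side-chain idea. Rather than a full threshold-and-ratio compressor, it follows the kick’s level with an envelope follower and turns the bass down in proportion, which is enough to show the pump. The toy 1 kHz sample rate, 80% ducking depth and 150 ms release are assumptions picked to keep the numbers readable.

```python
import math

def envelope(samples, sample_rate, release_ms=150.0):
    """Peak envelope follower: jumps up instantly, then decays exponentially."""
    coeff = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env, level = [], 0.0
    for s in samples:
        level = max(abs(s), level * coeff)
        env.append(level)
    return env

def sidechain_duck(target, key, sample_rate, depth=0.8, release_ms=150.0):
    """Turn `target` (e.g. a bass line) down whenever `key` (e.g. the kick) is loud."""
    key_env = envelope(key, sample_rate, release_ms)
    return [t * (1.0 - depth * min(1.0, e)) for t, e in zip(target, key_env)]

SR = 1000                                                   # toy sample rate
kick = [1.0 if i % 500 == 0 else 0.0 for i in range(1000)]  # kick hits at 0 s and 0.5 s
bass = [1.0] * 1000                                         # steady bass level, for clarity
ducked = sidechain_duck(bass, kick, SR)
for i in (0, 100, 250, 499, 500):
    print(f"{i / SR:.3f} s: bass gain {ducked[i]:.2f}")
```

The bass drops to 20% on each kick hit, then swells back up over the next few hundred milliseconds until the next hit pulls it down again; that rise and fall is the pumping groove.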

Multiband compression is the next level. Here, producers can apply different settings for different frequency bands. It’s especially useful in mastering, where it fine-tunes the dynamics of a final mix. Low-end frequencies can be compressed more heavily to add punch and tightness, while the high-end can be treated more gently to retain clarity and sparkle.
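A bare-bones Python sketch of the multiband idea, assuming just two bands: a simple one-pole low-pass filter splits the signal at 200 Hz, the high band is whatever is left over (so the two bands add back up to the original), and each band gets its own compressor settings, here a heavier 4:1 on the lows and a gentle 1.5:1 on the highs. The filter, crossover point and settings are all illustrative; real mastering tools typically use steeper crossover filters and more bands.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """Gentle 6 dB/octave low-pass, used here as a crude crossover."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y = (1.0 - a) * x + a * y
        out.append(y)
    return out

def compress_band(band, sample_rate, threshold_db, ratio, release_ms=100.0):
    """Peak-envelope compressor for one band (instant attack, exponential release)."""
    coeff = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    out, level = [], 0.0
    for x in band:
        level = max(abs(x), level * coeff)
        level_db = 20 * math.log10(max(level, 1e-9))
        over = max(0.0, level_db - threshold_db)
        gain_db = -(over - over / ratio)
        out.append(x * 10 ** (gain_db / 20))
    return out

def two_band_compress(samples, sample_rate, crossover_hz=200.0,
                      low=(-20.0, 4.0), high=(-20.0, 1.5)):
    """Split at `crossover_hz`, compress each band with its own (threshold_db, ratio), re-sum."""
    lows = one_pole_lowpass(samples, crossover_hz, sample_rate)
    highs = [x - l for x, l in zip(samples, lows)]  # complementary split: lows + highs == input
    lows = compress_band(lows, sample_rate, *low)
    highs = compress_band(highs, sample_rate, *high)
    return [l + h for l, h in zip(lows, highs)]

SR = 8000
bass_heavy = [math.sin(2 * math.pi * 50 * i / SR) for i in range(SR)]  # 1 s of a loud 50 Hz tone
out = two_band_compress(bass_heavy, SR)
print(f"peak before {max(map(abs, bass_heavy)):.2f}, after {max(map(abs, out[SR // 2:])):.2f}")
```

Setting both ratios to 1:1 gives you back what went in (up to rounding), which is a handy sanity check that the split itself isn’t colouring the sound.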

Equalisation Science

EQ is more than just little adjustments to frequencies; it’s how you shape the tone of a sound.

Parametric EQs offer the most control: you select specific frequencies to boost or cut and adjust the bandwidth, or Q (how wide the affected frequency range is), and some even give you control over phase. Graphic EQs divide the frequency spectrum into fixed bands. They give you less precision, but are easy to use for broader adjustments. Shelf EQs boost or cut all frequencies above or below a certain point.
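To make ‘frequency, gain and Q’ concrete, here’s a minimal Python sketch of a single parametric (peaking) band. The coefficient formulas follow Robert Bristow-Johnson’s widely used Audio EQ Cookbook; the 3 kHz, +4 dB, Q of 1 settings are just example values. It only calculates the filter coefficients and their frequency response; actually running audio through it would use the standard biquad difference equation.

```python
import cmath
import math

def peaking_eq(f0, gain_db, q, sample_rate):
    """Biquad coefficients (b, a) for a peaking (bell) EQ band, per the RBJ Audio EQ Cookbook."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / sample_rate
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    # Normalise so a[0] == 1, the usual convention for the difference equation.
    return [x / a[0] for x in b], [x / a[0] for x in a]

def response_db(b, a, freq, sample_rate):
    """Magnitude response of the biquad at `freq`, in dB."""
    z1 = cmath.exp(-1j * 2 * math.pi * freq / sample_rate)  # z^-1 on the unit circle
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

b, a = peaking_eq(f0=3000, gain_db=4.0, q=1.0, sample_rate=48_000)
for f in (100, 1500, 3000, 6000, 15000):
    print(f"{f:>6} Hz: {response_db(b, a, f, 48_000):+.2f} dB")
```

At exactly 3 kHz the response is the full +4 dB, and it falls back towards 0 dB the further you move from the centre; lowering Q would widen that bell.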

EQ can also affect the phase of audio. Phase refers to the timing of a sound wave’s cycle, and conventional EQs shift that timing by different amounts at different frequencies, mostly around the band you adjust. This phase shift can lead to subtle changes in the sound’s timbre and spatial characteristics. In some cases, this sounds great, adding colour or character to the sound. In others, phase shifts can lead to issues like muddiness or a lack of clarity.

Advanced EQ techniques, such as linear-phase EQ, are designed to avoid these frequency-dependent phase shifts by delaying every frequency by the same amount, preserving the original sonic characteristics of the audio while still allowing for tonal adjustments. Linear-phase EQs are particularly useful in mastering, where you really need to maintain the integrity of the mix’s phase.
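Here’s a small Python sketch of what ‘linear phase’ means. A finite impulse response (FIR) filter with mirror-image (symmetric) coefficients delays every frequency by exactly the same (N-1)/2 samples, so once you strip out that delay, what’s left of its response has no phase twist at all (a purely real number). The tap values below are made-up examples, not a real EQ curve.

```python
import cmath

def freq_response(taps, w):
    """Complex response of an FIR filter at normalised angular frequency w (radians/sample)."""
    return sum(h * cmath.exp(-1j * w * n) for n, h in enumerate(taps))

def delay_compensated(taps, w):
    """Undo a pure delay of (N-1)/2 samples; for a linear-phase filter the result is real."""
    delay = (len(taps) - 1) / 2
    return freq_response(taps, w) * cmath.exp(1j * w * delay)

symmetric = [0.05, 0.25, 0.4, 0.25, 0.05]  # hypothetical smoothing filter, mirror-image taps
lopsided = [0.4, 0.3, 0.15, 0.1, 0.05]     # hypothetical filter without the symmetry

for w in (0.3, 1.0, 2.0):
    print(f"w={w}: leftover phase twist (imag part) "
          f"symmetric {delay_compensated(symmetric, w).imag:+.1e}, "
          f"lopsided {delay_compensated(lopsided, w).imag:+.1e}")
```

The symmetric filter’s leftover phase twist is zero (up to rounding) at every frequency, while the lopsided one’s isn’t. The catch is that constant delay: linear-phase EQs typically need long filters, which is why they add latency to your session.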


Spatial Audio and Psychoacoustic Modeling

3D Audio Techniques

You may have noticed some of your favourite artists uploading spatial audio versions of their songs and wondered, what the hell is that? Spatial audio is three-dimensional sound, so it becomes completely immersive and almost like what you hear in the real world.

Binaural recording is one 3D technique. You place two microphones where a listener’s ears would be (often in the ears of a dummy head) and create a recording that gives listeners a 3D sensation. It mimics the way your ears receive sound from different directions, and it’s most realistic when you’re listening through headphones. Imagine sticking the listener in a room with sound coming at them from all directions.
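One of the cues binaural recordings capture is the tiny time gap between a sound reaching one ear and then the other. Here’s a quick Python sketch using Woodworth’s classic spherical-head approximation, assuming a head radius of 8.75 cm and a speed of sound of 343 m/s (both typical textbook values; real heads vary).

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C
HEAD_RADIUS = 0.0875    # m, a commonly used average

def itd_seconds(azimuth_deg):
    """Woodworth's spherical-head estimate of the interaural time difference.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side (valid for 0..90).
    """
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta))

for az in (0, 15, 45, 90):
    print(f"{az:>2} deg: {itd_seconds(az) * 1e6:5.0f} microseconds")
```

A sound straight ahead reaches both ears together, while one directly to the side arrives roughly 0.65 milliseconds earlier at the near ear, and your brain uses differences that small to place it.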

Ambisonics takes the concept even further by capturing sound from all around (including above and below), then letting you play it back over a range of speaker layouts or over headphones. So now you’re in the middle of a sound sphere, and with head tracking (in a VR headset, for example) the audio adjusts realistically every time you turn your head.
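Here’s a minimal Python sketch of first-order ambisonic encoding: one mono sound becomes four channels (W, X, Y, Z) that describe the whole sphere, and turning your head is just a rotation of those channels. It assumes the older FuMa-style weighting for W (newer AmbiX files use a different channel order and scaling), and it skips decoding to speakers or headphones.

```python
import math

def encode_first_order(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one mono sample as first-order B-format (W, X, Y, Z), FuMa-style W weighting.

    Azimuth is measured anticlockwise from straight ahead (90 = hard left);
    elevation is measured upward from the horizon.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    w = sample / math.sqrt(2)                 # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)  # front/back
    y = sample * math.sin(az) * math.cos(el)  # left/right
    z = sample * math.sin(el)                 # up/down
    return w, x, y, z

def rotate_yaw(bformat, degrees):
    """Rotate the whole sound field anticlockwise about the vertical axis."""
    w, x, y, z = bformat
    r = math.radians(degrees)
    return (w, x * math.cos(r) - y * math.sin(r), x * math.sin(r) + y * math.cos(r), z)

front = encode_first_order(1.0, 0)
print(front)                  # W and X carry the signal; Y and Z are zero
print(rotate_yaw(front, 90))  # up to rounding, the same as a source at 90 degrees
```

A head-tracked player would apply the opposite of the listener’s head turn with something like `rotate_yaw` before decoding, which is why the sound stays put in the room while you look around.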

Dolby Atmos is the version of spatial audio most people have heard of. Dolby uses overhead speakers and a badass sound system to create an immersive audio experience. It’s what you’re hearing when you’re rocking back in your recliner at an Atmos-equipped cinema.

Psychoacoustic Modeling

Psychoacoustic modelling is a cool area of audio: it tricks our brains into perceiving space and depth, even when they’re not really there. Imagine putting on a pair of headphones and feeling as though you’re sitting in a concert hall, with music coming from all around you.

Here, we’re talking virtual surround sound systems and headphones. This high-end gear can manipulate audio so your ears and brain are convinced the sound is coming from different directions, creating a surround sound experience without needing multiple speakers.

Psychoacoustics is really useful in video games and virtual reality, since you want to create a realistic audio environment that someone can fully believe in and be part of. For example, when you hear footsteps behind you, it makes you want to turn around to see who’s there. Psychoacoustic modelling helps create these soundscapes, adding a whole lot of depth and realism.

Temporal Perception in Audio

Time and Rhythm Perception

Time and rhythm are like the heartbeat of music, setting its pace and flow. How we perceive these elements in a song plays a big part in how we feel about the music. Think about how a fast-paced song can pump you up or how a slow rhythm can calm you down.

Take tempo rubato, for instance. It’s an Italian term that means “stolen time.” In music, it refers to the subtle speeding up and slowing down of tempo, giving a song an expressive, almost conversational quality. It’s like how in a heartfelt chat, sometimes we rush our words in excitement or slow down for emphasis. Tempo rubato in music works similarly.

Then there’s syncopation – that unexpected beat that makes you want to tap your foot or dance. It’s all about playing around with where we expect the beat to be. This off-beat rhythm creates a sense of surprise and excitement in a song, keeping it lively and dynamic. Rhythmic illusions are another trick up a musician’s sleeve. They play with our perception, making us hear rhythms and beats that aren’t really there, or in ways we wouldn’t expect.

Delay and Reverb Science

Delay and reverb are more than just special effects in audio production; they’re like echoes of space and time. If you’re in a large cathedral and clap your hands – the way the sound lingers and bounces around the room is reverb. Or think of shouting across a valley and hearing your voice echo back – that’s delay. Both give a real sense of space to a recording.

Reverb is all about the ambiance. It’s the natural reverberation of sound in a space, like a hall, a bathroom, or a cave. In audio production, reverb is used to give a sense of space to a sound. It can make a voice or instrument sound like it’s in a big hall, a cozy room, or an open field. The emotional impact of reverb is significant – it can make a song feel intimate, grand, or even eerie.
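How long a room rings out can be estimated with Sabine’s classic reverberation formula, RT60 = 0.161 × V / A, where V is the room volume in cubic metres and A is the total absorption (each surface’s area times its absorption coefficient). Here’s a Python sketch for a hypothetical 5 × 4 × 3 m room, assuming every surface has the same absorption coefficient, which is a big simplification.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine's estimate of reverberation time (seconds for a 60 dB decay).

    surfaces: list of (area_m2, absorption_coefficient) pairs, coefficients between 0 and 1.
    """
    absorption = sum(area * alpha for area, alpha in surfaces)  # total absorption, m^2 sabins
    return 0.161 * volume_m3 / absorption

# A hypothetical 5 m x 4 m x 3 m room.
LENGTH, WIDTH, HEIGHT = 5.0, 4.0, 3.0
volume = LENGTH * WIDTH * HEIGHT

def room_surfaces(alpha):
    """Floor, ceiling and walls of the room, all with the same absorption coefficient."""
    floor = ceiling = LENGTH * WIDTH
    walls = 2 * (LENGTH * HEIGHT) + 2 * (WIDTH * HEIGHT)
    return [(floor, alpha), (ceiling, alpha), (walls, alpha)]

print(f"hard surfaces (alpha 0.1): {sabine_rt60(volume, room_surfaces(0.1)):.2f} s")
print(f"treated room  (alpha 0.3): {sabine_rt60(volume, room_surfaces(0.3)):.2f} s")
```

Swapping hard surfaces (coefficient 0.1) for treated ones (0.3) drops the reverb time from about 1.03 s to about 0.34 s in this simple model.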

Delay, on the other hand, is about repetition of sound over time. It’s like an echo effect, where a sound repeats itself after a short interval. This can create a sense of rhythm and layering in music. Think of a guitarist using a delay pedal to create a cascading series of notes, or a vocalist whose voice is layered on top of itself for a haunting effect.
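Here’s a minimal Python sketch of a feedback delay, the basic engine inside a delay pedal or plug-in: a circular buffer holds the last few moments of audio, and each echo is fed back in at a lower level. The feedback and mix values and the tempo helper are my own illustrative choices; 60,000 divided by the BPM gives the length of one beat in milliseconds, which is how delay times are usually locked to a song’s tempo.

```python
def feedback_delay(samples, delay_samples, feedback=0.5, mix=0.5):
    """Echo effect: each repeat is the signal from `delay_samples` ago, scaled by `feedback`."""
    buffer = [0.0] * delay_samples  # circular buffer holding the delayed signal
    out, pos = [], 0
    for x in samples:
        delayed = buffer[pos]
        buffer[pos] = x + delayed * feedback  # feed part of the echo back in
        pos = (pos + 1) % delay_samples
        out.append((1 - mix) * x + mix * delayed)
    return out

def delay_ms_for_tempo(bpm, beats=1.0):
    """Delay time that lines echoes up with the song: one beat lasts 60000 / bpm ms."""
    return 60_000.0 / bpm * beats

click = [1.0] + [0.0] * 9
print(feedback_delay(click, delay_samples=3))  # [0.5, 0.0, 0.0, 0.5, 0.0, 0.0, 0.25, 0.0, 0.0, 0.125]
print(delay_ms_for_tempo(120))                 # 500.0 (quarter note at 120 BPM)
print(delay_ms_for_tempo(120, beats=0.75))     # 375.0 (dotted eighth at 120 BPM)
```

Feeding in a single click gives echoes that halve in level each time. At 120 BPM one beat is 500 ms, and a dotted eighth (0.75 of a beat) is 375 ms, a popular setting for that cascading guitar sound.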

Advanced Spectral Analysis

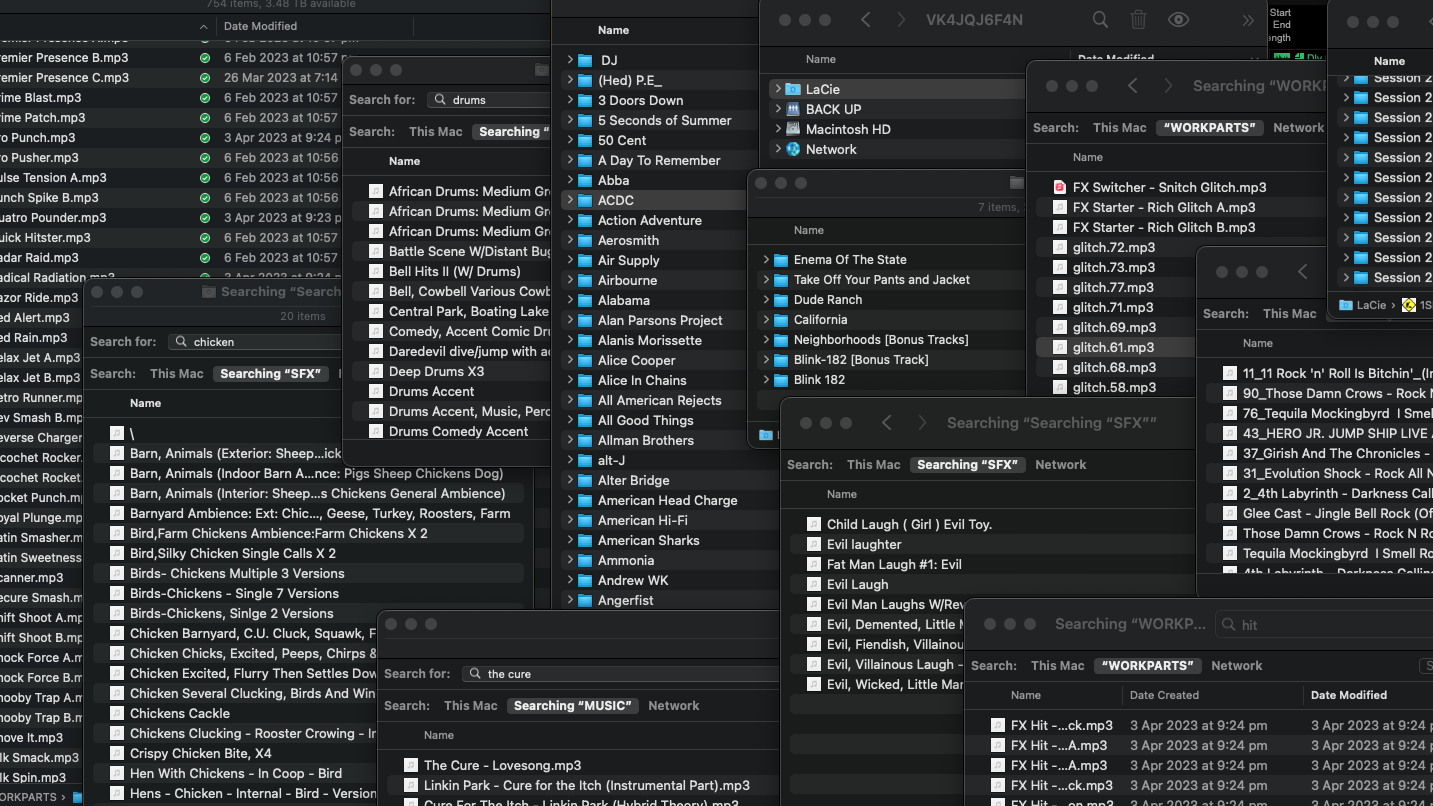

Spectral Analysis in Practice

Spectral analysis is like having a high-powered magnifying glass for sound, allowing audio producers to see the detailed makeup of a sound across its frequency spectrum. Imagine a sound as a rainbow of colours, each colour representing a different pitch or tone. Spectral analysis lays out this “sound rainbow” visually (above), showing which parts are bright and vibrant (loud or dominant frequencies) and which are faint (quieter frequencies).
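Here’s a minimal Python sketch of what a spectrum analyser does under the hood: take a short frame of audio, apply a Hann window to tame edge effects, run a Fourier transform, and convert each frequency bin to decibels. The test signal (a loud 440 Hz tone plus a quieter 1,320 Hz tone), the 8 kHz sample rate and the frame length are assumptions chosen so the maths comes out tidy.

```python
import cmath
import math

def magnitude_spectrum_db(frame, sample_rate):
    """Hann-windowed DFT of one frame: (frequency_hz, level_db) for each bin up to Nyquist."""
    n = len(frame)
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]  # periodic Hann
    windowed = [s * w for s, w in zip(frame, window)]
    spectrum = []
    for k in range(n // 2 + 1):
        x = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(windowed))
        # Dividing by n/4 scales a full-scale sine sitting exactly on a bin to about 0 dB.
        level = 20 * math.log10(max(abs(x) / (n / 4), 1e-12))
        spectrum.append((k * sample_rate / n, level))
    return spectrum

SR = 8000
N = 400  # 50 ms frame, so each bin is 20 Hz wide
frame = [math.sin(2 * math.pi * 440 * i / SR) + 0.25 * math.sin(2 * math.pi * 1320 * i / SR)
         for i in range(N)]
spectrum = magnitude_spectrum_db(frame, SR)
for freq, level in spectrum:
    if level > -20:
        print(f"{freq:7.1f} Hz: {level:6.1f} dB")
```

The 440 Hz tone shows up at about 0 dB and the quieter 1,320 Hz tone about 12 dB lower, with a little spill into neighbouring bins from the window. A real analyser repeats this frame after frame with an FFT.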

It’s really useful for identifying spots in your mix where there is frequency masking, where one sound is drowning out another. It’s like noticing that a certain colour is overpowering the rest in a painting. Producers use this insight to tweak the sound, so each frequency has its own space and shines through clearly. So you might roll off the bottom end of a guitar and pan it, so it sits in a different frequency range and also in a different space in the mix.
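For the panning half of that trick, here’s a Python sketch of the common constant-power (sine/cosine) pan law, which keeps a sound at roughly the same loudness as it moves across the stereo field. The -1 to +1 pan scale is an assumption; DAWs use various scales and pan laws.

```python
import math

def constant_power_pan(pan):
    """Left/right gains for pan in [-1, 1] (-1 = hard left, 0 = centre, 1 = hard right).

    Uses the sine/cosine law so left**2 + right**2 == 1, keeping total power steady.
    """
    angle = (pan + 1) * math.pi / 4  # maps -1..1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)

for p in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(p)
    print(f"pan {p:+.1f}: L {left:.3f}  R {right:.3f}")
```

In the centre both sides sit at about 0.707, the familiar -3 dB centre of this pan law.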

Harmonics Analysis

Harmonics analysis focusses on the unique characteristics each sound has that give it its timbre. Every musical note we hear is actually a mix of the main pitch (fundamental frequency) and several other higher pitches called harmonics. By manipulating harmonics, producers can change the texture and colour of a sound, making it warmer, brighter, richer, or more piercing. It helps with anything from creating a sound for a video game to making a guitar riff stand out in a song.
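One simple way to put a number on ‘warmer’ versus ‘brighter’ is the spectral centroid, the amplitude-weighted average frequency of a sound. Here’s a minimal Python sketch for a 220 Hz note with two made-up harmonic recipes; the amplitudes are illustrative, not measurements of any real instrument.

```python
def spectral_centroid(fundamental_hz, harmonic_amplitudes):
    """Amplitude-weighted average frequency of a harmonic tone, a common proxy for brightness."""
    freqs = [fundamental_hz * (n + 1) for n in range(len(harmonic_amplitudes))]
    total = sum(harmonic_amplitudes)
    return sum(f * a for f, a in zip(freqs, harmonic_amplitudes)) / total

# Hypothetical harmonic recipes for a 220 Hz note.
warm = [1.0, 0.5, 0.25, 0.12, 0.06]  # upper harmonics fall away quickly
bright = [1.0, 0.8, 0.7, 0.6, 0.5]   # upper harmonics kept strong

print(f"warm:   {spectral_centroid(220, warm):.0f} Hz")
print(f"bright: {spectral_centroid(220, bright):.0f} Hz")
```

Keeping the upper harmonics strong pushes the centroid up (roughly 400 Hz versus 590 Hz here), which tends to line up with the ear’s sense of brightness.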

The Impact of AI and Machine Learning

AI in Sound Analysis

Artificial intelligence is shaking up the science of sound. Take automatic transcription: it isn’t just about jotting down words; it’s about analysing the sound, identifying nuances of speech, the pitch of notes, or the unique characteristics of instruments. AI can listen to a piece of music and break down elements like rhythm, melody and the timbre of individual instruments in seconds.

AI sound classification maps the DNA of different sounds. It can tag the songs and sound effects in your library and tell you the genre, artist or instrument almost instantly. Trained on a giant catalogue of sounds and songs, the AI learns how to tell a guitar strum from a piano chord, or a rock song from a symphony.

And let’s not forget predictive algorithms. This is where AI gets really sciency, analysing trends and patterns in music to predict what’s going to be the next big hit, or what kind of sound might fit perfectly in your next production. It’s like having a music scientist who understands the physics of sound and the psychology of listening to create the perfect song.

Machine Learning Applications

Machine learning is all about teaching computers to understand, interpret and even create music and audio on their own. Using techniques like algorithmic composition and AI voice models, it’s behind all those songs you’re hearing on TikTok with Kurt Cobain singing Kanye West songs. It’s all made from learnt patterns. You can feed the computer a bunch of Beatles tracks, and it starts creating new songs that have a similar vibe. This isn’t just sci-fi stuff; it’s happening now.

Then there’s adaptive audio in video games, where machine learning changes the music and sound effects in a game depending on what the player is doing. It’s like the game is composing its own soundtrack on the fly, making the gaming experience way more immersive. If you’re sneaking around a corner, the music might get more tense; if you’re winning a race, it could become more upbeat. The game literally listens and reacts to your moves.

Automated mixing and mastering is where AI fine-tunes a song, balancing levels, equalising frequencies, etc. It’s like having a robot sound engineer who’s learned from the best in the world. For producers, this can mean faster workflows and maybe even some new creative options.


Future Directions in Sound Science

Speculation and Predictions

The future of sound and audio will most probably be like stepping into a sci-fi movie, where the boundaries of what we think is possible are constantly being pushed. AI and technology are developing so fast; imagine what’s around the corner!

Quantum computing could be a game-changer in audio processing, managing massive amounts of data at speeds we can hardly imagine now. So, what does this mean for sound? Imagine being able to process and analyse huge amounts of audio in seconds. It could mean breakthroughs in sound quality, making recordings even more lifelike, so it feels like Taylor Swift is actually in your house. It might even lead to new forms of sound that we’ve never heard before.

Then, there’s the development of new sound synthesis techniques. We’re not just talking about making new electronic sounds; this is about creating sounds that are totally new to our ears. It will be like discovering a new colour. Remember when red was invented? Ha! These new techniques could lead to entirely new genres of music or sound design. So exciting!

In the future, sound might not just be something we listen to; it could become a more interactive and immersive part of our lives. We could have new ways to communicate, new forms of entertainment, and even new ways to heal – think sound therapy taken to a whole new level.

The possibilities are endless, and while we can’t predict exactly what will happen, one thing is for sure – the future of sound and audio is going to be an exciting ride.

In a Nutshell …

The science of sound has a massive impact on the art of audio production. It’s not just pushing a button to record and play; so much more is happening under the bonnet! The best audio producers understand these concepts and push the boundaries of creativity, evoking emotions, telling stories and creating immersive experiences.

Anyway, back to the “sound sphere” my manager used to bang on about. Turns out it isn’t a specific, widely recognised concept in audio or acoustics, but I think it was his way of summing up everything I’ve been banging on about above.